Large Language Models Reveal the Neural Tracking of Linguistic Context in Attended and Unattended Multi-Talker Speech

Puffay, C.; Mischler, G.; Choudhari, V.; Vanthornhout, J.; Bickel, S.; Mehta, A. D.; Schevon, C. A.; McKhann, G. M.; Van hamme, H.; Francart, T.; Mesgarani, N.

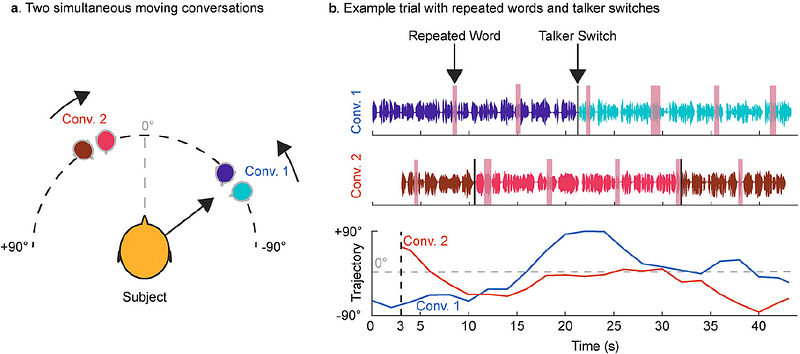

Abstract

Large language models (LLMs) have shown strong alignment with contextualized semantic encoding in the brain, offering a new avenue for studying language processing. However, natural speech often occurs in multi-talker environments, which remain underexplored. We investigated how auditory attention modulates context tracking using electrocorticography (ECoG) and stereoelectroencephalography (sEEG) recordings from three epilepsy patients in a two-conversation "cocktail party" paradigm. LLM embeddings were used to predict brain responses to attended and unattended speech streams. The results show that LLM embeddings reliably predict brain activity for the attended stream and, notably, that contextual features from the unattended stream also contribute to prediction. Importantly, the brain appears to track shorter-range context in the unattended stream than in the attended one. These findings indicate that the brain processes multiple speech streams in parallel, modulated by attention, and that LLMs can be valuable tools for probing neural language encoding in complex auditory settings.
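The idea of predicting neural responses from LLM embeddings of each speech stream can be illustrated with a minimal encoding-model sketch. The abstract does not specify the regression method, so this example assumes a linear ridge regression, which is a common choice for encoding models but not necessarily the authors' approach. All data here are synthetic, and the names `emb_attended`, `emb_unattended`, `fit_ridge`, and `held_out_correlation` are hypothetical. The sketch compares held-out prediction accuracy for a model using attended-stream features alone against a model using features from both streams.

```python
import numpy as np

def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression: w = (X^T X + alpha * I)^-1 X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

def held_out_correlation(X, y, n_train, alpha=1.0):
    """Fit on the first n_train samples, then correlate predictions
    with the measured response on the remaining samples."""
    w = fit_ridge(X[:n_train], y[:n_train], alpha)
    pred = X[n_train:] @ w
    return float(np.corrcoef(pred, y[n_train:])[0, 1])

# Synthetic stand-ins (assumption): one embedding vector per word for each
# stream. Real LLM embeddings would be much higher-dimensional.
rng = np.random.default_rng(0)
n_words, n_dims = 600, 16
emb_attended = rng.standard_normal((n_words, n_dims))
emb_unattended = rng.standard_normal((n_words, n_dims))

# Synthetic single-electrode response (assumption): driven strongly by the
# attended stream, more weakly by the unattended stream, plus noise.
w_att = rng.standard_normal(n_dims)
w_unatt = 0.5 * rng.standard_normal(n_dims)
response = (emb_attended @ w_att
            + emb_unattended @ w_unatt
            + rng.standard_normal(n_words))

# Compare an attended-only model against a model with both streams.
r_attended_only = held_out_correlation(emb_attended, response, n_train=400)
r_both = held_out_correlation(
    np.hstack([emb_attended, emb_unattended]), response, n_train=400
)

print(f"attended only: r = {r_attended_only:.3f}")
print(f"both streams:  r = {r_both:.3f}")
```

In this toy setup, a gain in held-out correlation when unattended-stream features are added plays the role of the "contribution to prediction" mentioned in the abstract. Probing context range would additionally require recomputing the embeddings with different context window lengths, which this sketch does not attempt.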