Plug-and-Play automated behavioral tracking of zebrafish larvae with DeepLabCut and SLEAP: pre-trained networks and datasets of annotated poses

Scholz, L. A.; Mancienne, T. G.; Stednitz, S. J.; Scott, E. K.; Lee, C. C. Y.

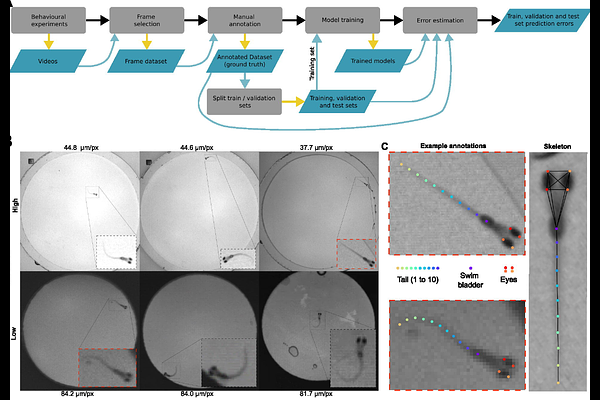

Abstract

Zebrafish are an important model system in behavioral neuroscience because of their rapid development and their suite of distinct, innate behaviors. Quantifying many of these larval behaviors requires detailed tracking of eye and tail kinematics. This in turn demands imaging at high spatial and temporal resolution, ideally with semi- or fully automated tracking methods for throughput efficiency. However, creating and validating accurate tracking models is time-consuming and labor-intensive, and many research groups duplicate this effort on similar images. With the goal of developing a useful community resource, we trained pose estimation models based on a 15-keypoint pose model, using videos that span a diverse array of video parameters. We deliver an annotated dataset of free-swimming and head-embedded behavioral videos of larval zebrafish, along with four pose estimation networks from DeepLabCut and SLEAP (two variants of each). We also evaluated model performance across varying imaging conditions to guide users in optimizing their imaging setups. This resource will allow other researchers to skip the laborious training steps required to set up behavioral analyses, help them select a model suited to their specific research needs, and provide ground-truth data for benchmarking new tracking methods.
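Because the annotated poses are offered as ground truth for benchmarking, a minimal sketch of such a comparison may be useful. The example assumes that predictions and annotations have already been loaded into lists of frames, each frame a list of (x, y) pixel coordinates (15 per frame for this pose model), with `None` marking a missing or unlabeled keypoint. Loading DeepLabCut or SLEAP output files is not shown. The metrics (mean Euclidean error per keypoint, and the fraction of scorable frames within a pixel threshold, often called PCK) and the helper names `keypoint_errors` and `summarize` are illustrative assumptions, not the evaluation procedure used by the authors.

```python
import math
from typing import List, Optional, Sequence, Tuple

# Assumed (hypothetical) layout: one list per frame, one (x, y) pixel coordinate
# per keypoint, with None marking a keypoint that is missing or not annotated.
Point = Optional[Tuple[float, float]]
Frame = Sequence[Point]


def keypoint_errors(pred: Sequence[Frame], truth: Sequence[Frame]) -> List[List[float]]:
    """Euclidean distance (pixels) between predicted and annotated keypoints.

    Returns one row per frame and one column per keypoint; NaN where either
    the prediction or the annotation is missing.
    """
    if len(pred) != len(truth):
        raise ValueError("prediction and ground truth differ in frame count")
    errors: List[List[float]] = []
    for pred_frame, true_frame in zip(pred, truth):
        if len(pred_frame) != len(true_frame):
            raise ValueError("prediction and ground truth differ in keypoint count")
        errors.append([
            math.nan if p is None or t is None else math.dist(p, t)
            for p, t in zip(pred_frame, true_frame)
        ])
    return errors


def summarize(errors: List[List[float]], threshold_px: float) -> Tuple[List[float], List[float]]:
    """Per-keypoint mean error and fraction of scorable frames within threshold_px (PCK)."""
    if not errors:
        return [], []
    mean_err: List[float] = []
    pck: List[float] = []
    for k in range(len(errors[0])):
        valid = [row[k] for row in errors if not math.isnan(row[k])]
        if not valid:  # keypoint never scorable: report NaN rather than 0
            mean_err.append(math.nan)
            pck.append(math.nan)
            continue
        mean_err.append(sum(valid) / len(valid))
        pck.append(sum(e <= threshold_px for e in valid) / len(valid))
    return mean_err, pck


if __name__ == "__main__":
    # Toy data: 2 frames x 2 keypoints (real data would have 15 keypoints per frame).
    pred = [[(0.0, 0.0), (3.0, 4.0)], [(1.0, 1.0), None]]
    truth = [[(0.0, 0.0), (0.0, 0.0)], [(1.0, 2.0), (5.0, 5.0)]]
    mean_err, pck = summarize(keypoint_errors(pred, truth), threshold_px=2.0)
    print(mean_err)  # [0.5, 5.0]
    print(pck)       # [1.0, 0.0]
```

Frames where either side is missing are excluded per keypoint rather than counted as failures. A benchmark that penalizes missed detections would need a different convention, and the pixel threshold should be chosen relative to the image scale of each recording setup.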